ARF Can Dance!

Among the many things I have been busy with, I have been working on a fun custom animation for my Aibo ERS-7, ARF!

This took me way too long to make. #ChipiChipi #aibo pic.twitter.com/d9I7FIiRa8

— Rami-Pastrami (@rami_pastrami) January 2, 2025

If you want to read about my first general impressions of messing with this classic robot, I suggest checking out my previous blog here. The TL;DR is that the robot is cool, but it's from ~2003, and thus the software runs best on Windows XP (you can technically run it on newer versions, but there may be issues which I wanted to avoid, so I went the VM route). In addition, there are programming capabilities on the ERS-7 that I wished to explore.

But first, I wanted to try making a standard animation for my AIBO, and of course I had to settle on Chipi Chipi Chapa Chapa, because why not? And thus started my adventure.

Actually, before going any further, I want to thank the maintainers of DogsBodyNet and AiboHack for keeping their sites up for all these years and being an amazing resource for people like me. Thank you! I would also like to thank the RobotCenter Discord for being an awesome (and still active) community!

Now, to animating!

Creating the Animation (and Who Needs "Reverse Kinematics" Anyways?)

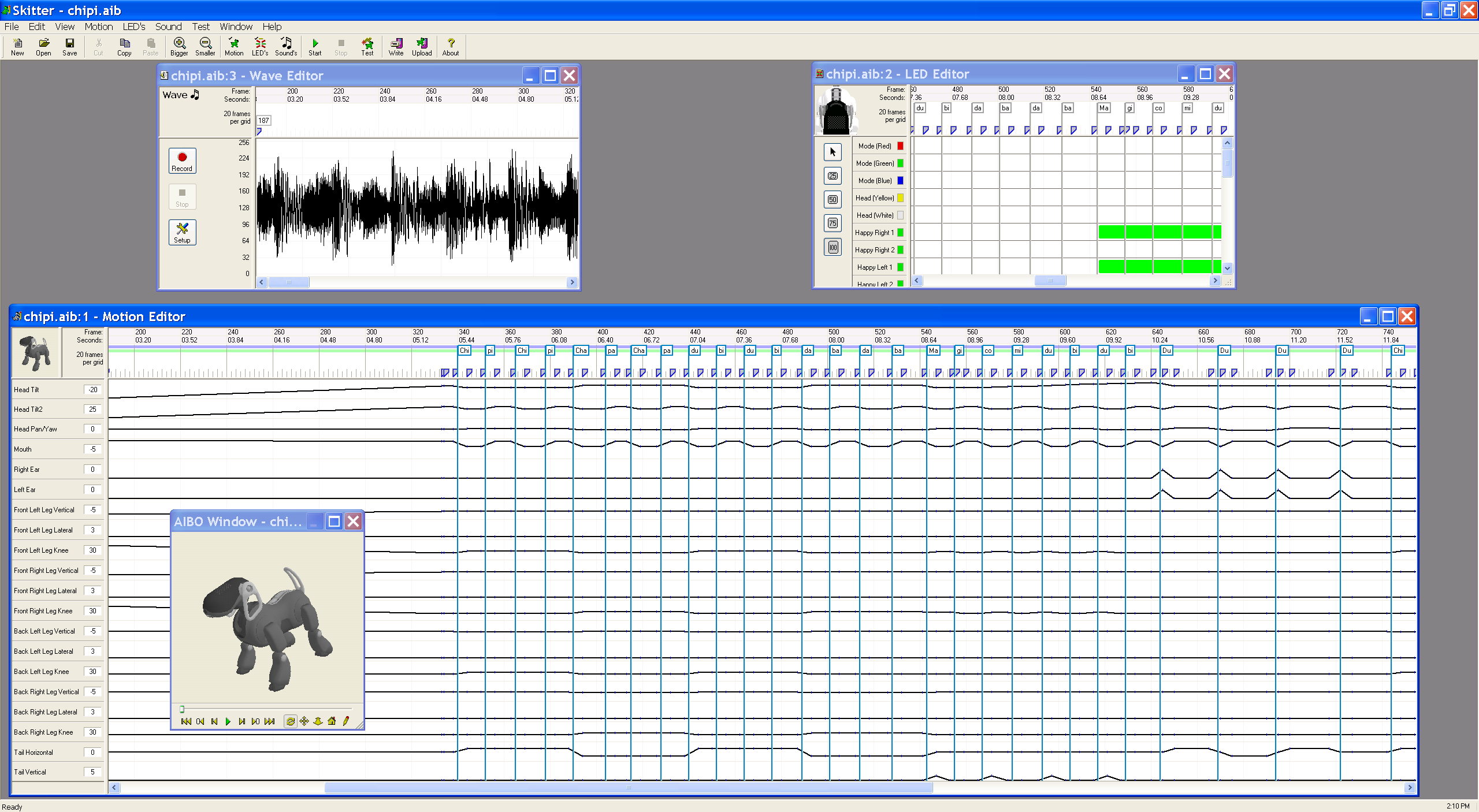

From my understanding, Sony did have animation tools for the ERS-7, but I couldn't find them and they likely weren't very good. Instead, I went with the community-made Skitter project.

Skitter ended up being my Swiss Army knife for creating the animation, as it combined the sound, LED, motion editors, and a preview into a single workspace. It even contained the tools required for uploading the animations to the memory stick and for testing out animations via Wi-Fi (though more on that later).

The first order of business was the audio. A lot of older Aibo models can only play MIDI files, but the ERS-7 breaks new ground by supporting WAV files at up to 8 kHz, 8-bit, on a single channel! Truly incredible! (OK, but let's be real, it's not like you are going to be using the Aibo's small speakers to listen to your FLAC album anytime soon anyways.) Now, while Skitter does have some basic sound editing features, I ended up using Audacity to take the original audio and trim it down. The only thing I used Skitter's audio editor for was positioning the start point of the music.
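For anyone who would rather script the conversion than do it by hand, here is a minimal sketch of squeezing a 16-bit PCM WAV down to the 8 kHz, 8-bit, mono format mentioned above, using only the Python standard library. This is not what I actually did (I used Audacity), the function names are made up for illustration, and the nearest-sample resampling is crude (no anti-alias filter), so treat it as a starting point rather than a polished tool.

```python
import io
import struct
import wave

TARGET_RATE = 8000  # Hz, the ERS-7 limit described in the post


def downmix_to_mono(samples, channels):
    """Average interleaved signed 16-bit samples across channels."""
    return [
        sum(samples[i:i + channels]) // channels
        for i in range(0, len(samples) - channels + 1, channels)
    ]


def resample_nearest(mono, src_rate, dst_rate=TARGET_RATE):
    """Crude nearest-sample resampling (assumption: good enough for a tiny speaker)."""
    n_out = len(mono) * dst_rate // src_rate
    return [mono[i * src_rate // dst_rate] for i in range(n_out)]


def to_unsigned_8bit(mono16):
    """Map signed 16-bit (-32768..32767) to unsigned 8-bit (0..255), as 8-bit WAV expects."""
    return bytes((s + 32768) >> 8 for s in mono16)


def convert_wav(src, dst):
    """Read a 16-bit PCM WAV from src and write 8 kHz / 8-bit / mono to dst.

    src and dst may be file paths or binary file-like objects.
    """
    with wave.open(src, "rb") as w:
        if w.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM input")
        channels, rate = w.getnchannels(), w.getframerate()
        raw = w.readframes(w.getnframes())
    samples = list(struct.unpack("<%dh" % (len(raw) // 2), raw))
    mono = resample_nearest(downmix_to_mono(samples, channels), rate)
    with wave.open(dst, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(1)
        out.setframerate(TARGET_RATE)
        out.writeframes(to_unsigned_8bit(mono))
```

Calling `convert_wav("chipi.wav", "chipi_8k.wav")` (hypothetical file names) would write the downsampled file next to the original.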

The next step was motion editing. Given that this is a physical robot, Skitter puts in certain checks to ensure you aren't doing anything stupid, namely creating impossibly fast motor movements (it labels them red and prints tons of warnings).
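I don't know how Skitter implements its speed check internally, but the idea can be sketched in a few lines: look at each pair of neighboring keyframes for a joint and flag the ones where the angle changes faster than the motor could manage. The speed limit below is a made-up number for illustration, not a real ERS-7 spec, and the function name is hypothetical.

```python
MAX_DEG_PER_SEC = 300.0  # hypothetical limit, NOT an actual ERS-7 motor spec


def too_fast_segments(keyframes, max_speed=MAX_DEG_PER_SEC):
    """Return (t0, t1, speed) for each pair of consecutive keyframes whose
    implied angular speed exceeds max_speed.

    keyframes: list of (time_seconds, angle_degrees), sorted by time.
    """
    flagged = []
    for (t0, a0), (t1, a1) in zip(keyframes, keyframes[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("keyframes must be strictly increasing in time")
        speed = abs(a1 - a0) / dt  # degrees per second between the two keyframes
        if speed > max_speed:
            flagged.append((t0, t1, speed))
    return flagged
```

For example, a joint that moves 90 degrees in a quarter of a second implies 360 degrees per second, which this sketch would flag against the assumed 300 limit.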

You may be noticing all the lines in the motion editor. Those are essentially a timeline of the angle value of each motor. This is fine to have and rather standard; the problem is that it is the only way to animate the robot. Granted, there are some helpers like drag-select and the ability to copy and paste a window of time, but to be frank this is a very painful and slow way to animate. This process took 3 weeks of my free time, and while I certainly was getting better at it toward the end, I still believe my final result is stiff. I look at tools like Blender and hell, even stuff I was doing in Garry's Mod (anyone remember Stop Motion Helper?), and just wish I could grab the preview and animate joints with dragging and reverse-kinematics.

In the end, what worked for me was using the comment feature to label every syllable of the song as best as I could. Then I added a blank keyframe slightly before and slightly after each comment, marking where the motor movements would occur. This made my process a lot easier once I figured it out, but again, the results do look a bit unnatural.
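I placed all of these by hand in Skitter, but the bracketing idea itself is simple enough to sketch: given a list of syllable timestamps, compute a keyframe time slightly before and slightly after each one. The function name and the default offsets are assumptions for illustration only.

```python
def bracket_times(syllable_times, lead=0.05, lag=0.05):
    """For each syllable time (in seconds), return a (before, after) pair of
    keyframe times. The 'before' time is clamped so it never goes below 0."""
    return [(max(0.0, t - lead), t + lag) for t in syllable_times]
```

The motion for each syllable then lives between its `before` and `after` keyframes, with the pose held steady in between syllables.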

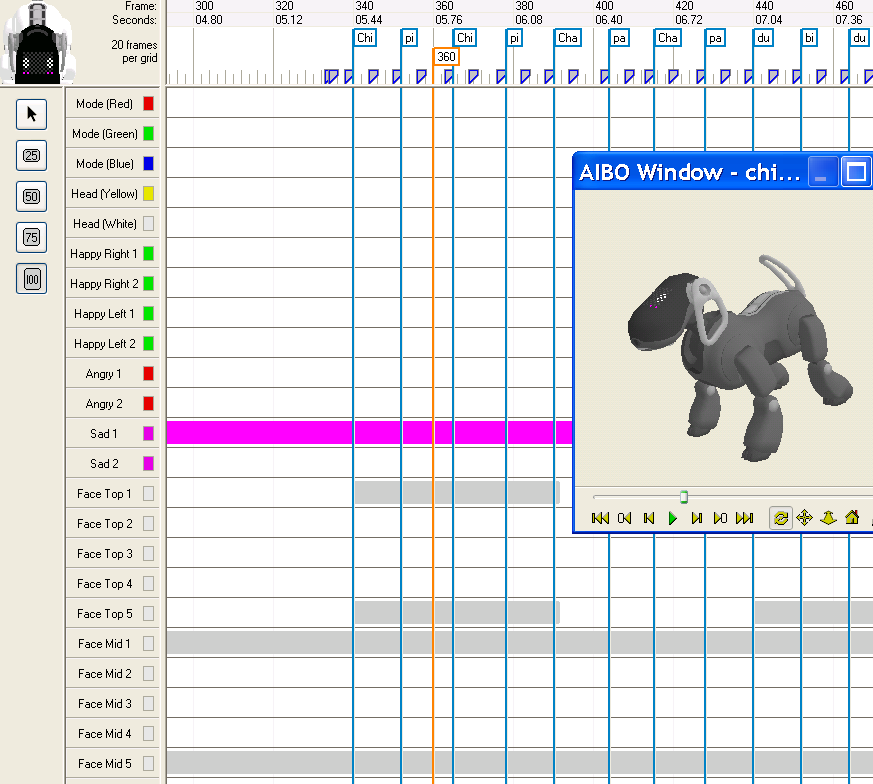

The LED editor was quite similar in concept, but instead of lines you draw boxes along certain LEDs. Despite first appearances, the ERS-7 does not have an addressable RGB matrix on its head. Rather, it has fixed-color LEDs in certain regions that can only light up in specific colors. That does limit you quite a bit.

Thankfully, the comments carry over from the motion editor, which made my life a lot easier. I completed this section very fast.

I did hit an unfortunate bug, though. It seems certain lighting regions get mixed up during export. I tried using the pink "sad" LEDs to indicate blushing, and it showed up as expected in the preview. On the physical robot, however, it fires the red "angry" LEDs up top instead. Odd.

But regardless, overall I was quite happy with my experience, given the era this software came out in (Windows XP support had to be added in a later release, this is THAT old!). I never had corruption or crashes; everything was stable. The application generally used around 13 MB of RAM (that's right, 13 MEGABYTES). It's a true testament to how good software engineers had to be at optimization to work on these old machines. Today, someone trying to make a similar application would probably make something in Electron and use 2 gigabytes of RAM. For the menu screen. So yeah, thanks to the original makers of this great software!


And so, with my animation complete after a lot of hard work, it was time to get ARF to dance!

Teaching a Very Old Dog, Very New Tricks

Getting the animation onto the ERS-7 was a challenge.

Let's start with some background information. The classic Aibo series stores most of its programming (as well as save data, AKA their "personalities") on Sony Memory Sticks.

Now, Aibo Memory Sticks not only had fancier labels, they also had some fancier labeling that made it non-trivial to simply copy their code onto blank generic sticks, but you could still generally read from and write to them like a USB stick.

And that's what I tried at first. I took ARF's included stick, branded with the AiboMind 3 "OS", and used the Clie PDA to have it show up on the XP VM as a USB flash drive. I took a backup of the stick's file structure, and completed my actual animation 3 weeks later. To write the animation onto the stick, I used a tool included with Skitter called SAMM, which can replace the built-in dances of AiboMind with the ones you created. Unfortunately, it didn't work and ARF failed to boot. I had to roll ARF back to the previous backup, technically giving ARF amnesia for the last 3 weeks. Sorry ARF!

(By the way, did I mention that around this time I had just finished playing SOMA for the first time? That totally was not passing through my mind through all of this!)

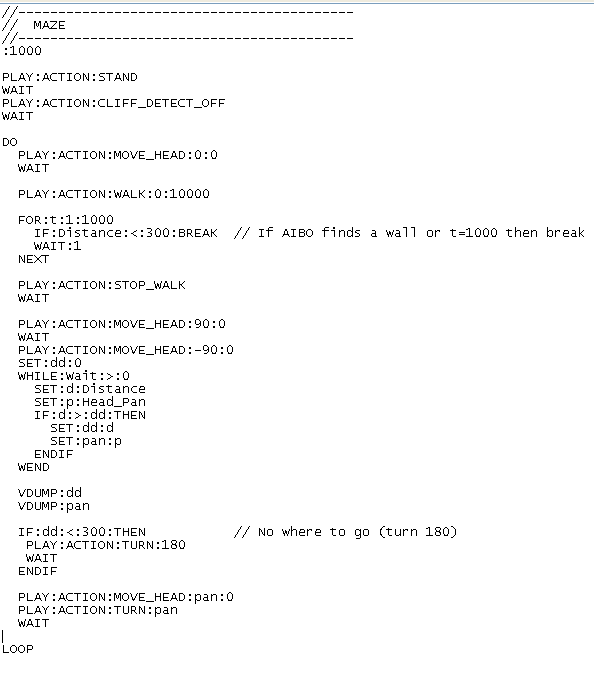

So I had poor luck with modifying the stock system. It turns out, however, that users can create their own entire programs for the AIBO instead. This is primarily done by programming in R-CODE (or OPEN-R for lower-level access). So what does R-CODE look like?

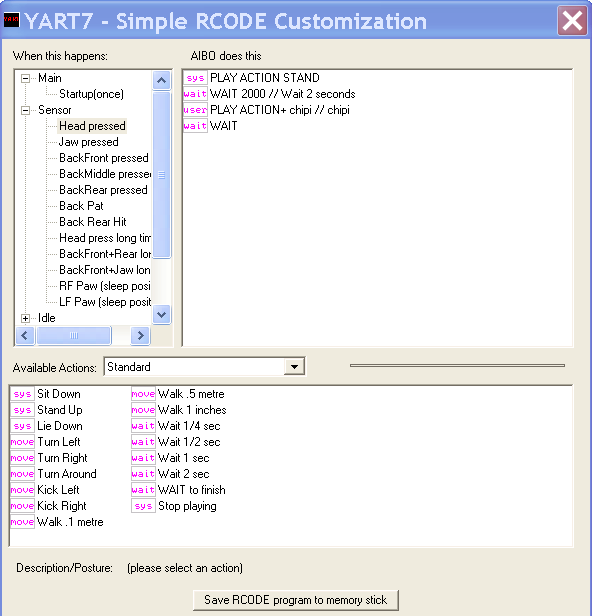

Alright, maybe it wouldn't be that bad, and honestly I will explore this further down the line anyways. Meanwhile, however, there was another system I was more interested in: YART. YART is essentially a very basic R-CODE program that can be configured using an included Windows program. All I needed was a program to launch an animation, so this would be perfect.

But it didn't matter which route I took, because the AiboMind stick is "locked" and will not run R-CODE freely. To run R-CODE on an Aibo, Sony required you to buy "special" pink memory sticks. I looked into getting one and found a few being sold online, for a few hundred dollars.

Thankfully, someone else was fed up with that arbitrary limitation and created a program called StikZap, which essentially makes any cheap generic Sony Memory Stick look like one of the pink programming ones. Unfortunately, it only runs on Palm PDAs (ones that have a Sony Memory Stick slot, so pretty much only Clie PDAs). Luckily, I had already gotten this far in my research earlier, which is why I bought a PDA in the last blog post that also doubles as a USB reader for the memory stick. I installed StikZap and, after skipping a few windows complaining about my screen resolution, managed to "zap" my generic 128 MB Sony stick. ARF can't tell the difference!

After that, I grabbed YART and set up a very simple automation for having the AIBO play the animation after I tap the head.

Also, apparently YART can work alongside Skitter to let you test your animations on the AIBO over Wi-Fi. Honestly, I wish I had set this up earlier.

Anyways, after getting this set up, all that was left to do was to give it a test, and it worked flawlessly on the first try! I took the video of ARF grooving it out, and the rest is history!

So What's Next?

Well, first off, I returned ARF to his AiboMind stick, since that's where his artificial soul is and all. YART is cool and certainly a great tool for me to test stuff like this, but ultimately its simplicity also limits its functions greatly.

How did I feel about the animation? Honestly, I am happy that I went through with it from start to finish. And as grateful as I am to the people behind Skitter for enabling me to do this, I don't think I want to make more animations with that limited motion editor.

However, I may not have to. See, the awesome guys there actually put out documentation on the byte structure of their save files (these devs cannot stop collecting W's even decades later). Honestly, I have been wanting to try my hand at making a plugin for Blender for a while. So it may make sense for me to create a Blender plugin for animating the ERS-7 with all the modern animation creature comforts, and have it export a save file that Skitter can run its sanity checks on and then export for the AIBO to use.

Past that? Well, I did do a little bit of poking around with YART installed. It turns out YART, as an R-CODE program, enables telnet on the AIBO, allowing the AibNet tool to connect to it. AibNet is essentially a remote IDE environment for the AIBO, again made by many of the same people behind Skitter. I played around with it a bit, but didn't make anything serious. However, I do think I will cycle back to this at a future point. What I would love to build one day is an R-CODE client system on the AIBO, essentially turning the robot into a streaming puppet over Wi-Fi, with a program on a modern PC with modern compute abilities handling all the decision making. I actually did find a past attempt at this idea, but it seems long abandoned.

But for right now I am jumping back into VR and neuroscience projects. See you in 5 months for my next monthly update, and Happy New Year till then!